Introduction

Stockfish has been considered the best chess engine in the world for years. It consistently dominates the rankings and wins virtually every tournament it enters. Each new version seems to surpass the previous one, and although progress has appeared slower over the past two years, the engine’s analysis has become increasingly precise and fast.

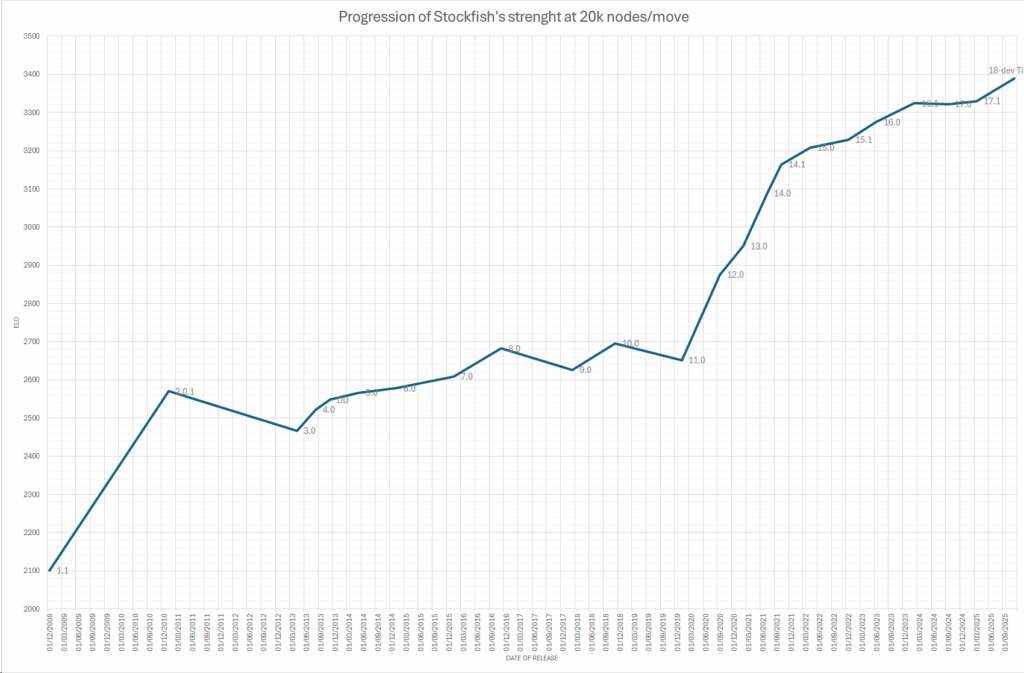

The evolution of Stockfish has not been linear: it has been a succession of algorithmic innovations, code optimisations, and, more recently, a true revolution with the introduction of NNUE neural networks.

The rating lists available online, including the one I maintain, rank engines primarily at equal time (e.g., x seconds per move or x moves in y minutes). These tests measure overall strength in practical conditions. An alternative approach is to evaluate engines at fixed nodes: this method examines the efficiency of the analysis (quality of the evaluation and effectiveness of alpha-beta pruning) and removes raw calculation speed as a variable.

For this reason, I conducted a rigorous single-core test limited to 20,000 nodes per move, a deliberately low number that allows all the main historical versions of Stockfish to be compared. Every engine searched exactly the same number of nodes before choosing a move and played at least 5,000 games (some nearly 15,000) to minimise statistical error. A link to the rating list of all engines tested at 20,000 nodes per move is provided at the end of the article.
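As a rough illustration of why thousands of games are needed, the sketch below estimates how the 95% error margin of an Elo measurement shrinks with the number of games. It is not the method used to build the rating list: it assumes independent games, a score close to 50%, a hypothetical draw rate of 30%, and a normal approximation, and the function names are illustrative.

```python
import math


def elo_from_score(score: float) -> float:
    """Elo difference implied by an expected score in (0, 1), logistic model."""
    return -400.0 * math.log10(1.0 / score - 1.0)


def elo_margin_95(games: int, score: float = 0.5, draw_rate: float = 0.3) -> float:
    """Approximate 95% half-width, in Elo, of a match result.

    draw_rate is an assumed value, not a figure from the article.
    """
    win = score - draw_rate / 2.0
    loss = 1.0 - win - draw_rate
    # Per-game variance of the result (win = 1, draw = 0.5, loss = 0).
    variance = (
        win * (1.0 - score) ** 2
        + draw_rate * (0.5 - score) ** 2
        + loss * (0.0 - score) ** 2
    )
    stderr = math.sqrt(variance / games)
    low = elo_from_score(score - 1.96 * stderr)
    high = elo_from_score(score + 1.96 * stderr)
    return (high - low) / 2.0


for n in (500, 5_000, 15_000):
    print(f"{n:>6} games: about +/- {elo_margin_95(n):.1f} Elo")
```

Under these assumptions the margin is roughly 8 Elo at 5,000 games and under 5 Elo at 15,000, which is why small differences between adjacent versions need large samples.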

To contextualise the results, Stockfish 17.1, limited to 20,000 nodes per move, was then compared against dozens of other engines, which were assigned the usual thinking time used in my tests (40 moves in 120 seconds, equivalent to about 40 moves in 120 minutes on a Pentium 90). This made it possible to obtain a score comparable to my main rating list, whose goal is to correlate the Elo scores of the engines with the FIDE scores of human players.

Results

As expected, the quality of the analysis progressively improves with the release of new versions. In almost twenty years, Stockfish has gone from an estimated Elo of 2101 (close to the level of a Candidate Master) to the superhuman strength of the current versions. The overall increase exceeds 1250 Elo points, reflecting nearly two decades of algorithmic, engineering, and training improvements. The intermediate values show a generally increasing, albeit not always linear, progression.

A turning point is the introduction of NNUE (Efficiently Updatable Neural Network) evaluation between versions 11 and 12. This development produced a significant jump in strength, especially evident around the 2020–2021 period. Even Stockfish 12, limited to just 20,000 nodes per move, would be able to beat most human GMs with ease, and subsequent versions would not look out of place in traditional time-based rating lists.

From a technical standpoint, NNUE reduced the reliance on manual evaluations, allowing the engine to capture positional patterns and piece interactions that are difficult to represent with traditional formulas. Furthermore, the efficient implementation (incremental updates and use of SIMD instructions) made it possible to achieve these improvements without resorting to dedicated GPUs, thus maintaining ease of use and portability.
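The idea behind incremental updates can be sketched with a toy first layer. This is not Stockfish's code: the sizes, the random weights, and the simple piece-times-square feature index are all assumptions made for illustration (Stockfish's real feature sets also depend on king position). The point is only that after a move, the first-layer sums can be corrected by subtracting and adding a few weight columns instead of being recomputed from scratch.

```python
import random

NUM_FEATURES = 12 * 64  # toy choice: 12 piece types x 64 squares
HIDDEN = 8              # toy hidden-layer width; real networks are far larger

rng = random.Random(0)
# One column of integer weights per input feature (random, for illustration).
WEIGHTS = [[rng.randint(-10, 10) for _ in range(HIDDEN)]
           for _ in range(NUM_FEATURES)]


def feature_index(piece: int, square: int) -> int:
    """Hypothetical feature numbering: one feature per (piece, square) pair."""
    return piece * 64 + square


def full_refresh(active_features):
    """Recompute the accumulator from every active feature (slow path)."""
    acc = [0] * HIDDEN
    for f in active_features:
        for i in range(HIDDEN):
            acc[i] += WEIGHTS[f][i]
    return acc


def incremental_update(acc, removed, added):
    """Adjust an existing accumulator for the few features a move changes."""
    new_acc = list(acc)
    for f in removed:
        for i in range(HIDDEN):
            new_acc[i] -= WEIGHTS[f][i]
    for f in added:
        for i in range(HIDDEN):
            new_acc[i] += WEIGHTS[f][i]
    return new_acc


# Toy position: pawn on e2 (piece 0), knight on g1 (piece 1), king on e1 (piece 5).
before = {feature_index(0, 12), feature_index(1, 6), feature_index(5, 4)}
# Quiet move: the knight goes from g1 (square 6) to f3 (square 21).
after = (before - {feature_index(1, 6)}) | {feature_index(1, 21)}

acc_before = full_refresh(before)
acc_after = incremental_update(acc_before,
                               removed=[feature_index(1, 6)],
                               added=[feature_index(1, 21)])
print(acc_after == full_refresh(after))
```

A quiet move touches two features here, so the update costs two column operations regardless of how many pieces are on the board. Those column additions and subtractions are the kind of work that maps naturally onto SIMD instructions.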

Before the advent of neural networks, growth was slower and sometimes non-uniform. Some versions, while stronger overall, achieved lower Elo scores in fixed-node tests (e.g., Stockfish 9 and 11 compared to versions 8 and 10). This suggests that, in those releases, optimisations related to calculation speed more than compensated for a less efficient analysis per node.

The new TI network

Over the past two years, progress has noticeably slowed. Starting from version 15, the Elo increments have been more modest. However, recently, a development version of Stockfish 18 introduced a new type of NNUE network, which considers not only the position of the pieces but also potential threats. Being about 50% larger, it requires greater computing capacity and reduces the number of nodes calculated per second.

Although it is computationally heavier, the fixed-node analysis shows a gain of about 60 Elo compared to Stockfish 17.1, suggesting significant potential for this innovation.
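To put these Elo figures in perspective, the standard logistic Elo formula converts a rating difference into an expected score. The short sketch below applies it to the 60-point gain mentioned here and to the 1250-point gain across all versions. The function name is illustrative.

```python
def expected_score(elo_diff: float) -> float:
    """Expected score of the stronger side under the logistic Elo model."""
    return 1.0 / (1.0 + 10.0 ** (-elo_diff / 400.0))


for diff in (60, 1250):
    print(f"{diff:+5d} Elo -> expected score {expected_score(diff):.4f}")
```

A 60 Elo advantage corresponds to an expected score of about 58.5%, while a 1250 Elo gap leaves the weaker side with less than 0.1% of the points.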

Conclusion

In approximately 18 years, Stockfish has enormously improved its positional evaluation, gaining over 1250 Elo points. The two main causes are:

- Fundamental innovations in the evaluation function, with NNUE and its evolution, which have generated significant leaps in strength.
- Search and engineering optimisations (tuning, pruning, move ordering, hardware optimisations), which have improved the quality of decisions for the same number of nodes.
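The effect of move ordering on a fixed node budget can be seen in a toy alpha-beta search. The sketch below is only an illustration under strong assumptions: the tree is random, and the "ordered" search uses an oracle that sorts moves by their exact value, which no real engine has (engines rely on heuristics instead). It counts how many leaf positions each search has to evaluate to reach the same result.

```python
import random

INF = 10**9


def build_tree(depth, branching, rng):
    """Uniform random game tree; leaves are scores for the side to move."""
    if depth == 0:
        return rng.randint(-100, 100)
    return [build_tree(depth - 1, branching, rng) for _ in range(branching)]


def negamax(node):
    """Exact value with no pruning (used as reference and as ordering oracle)."""
    if isinstance(node, int):
        return node
    return max(-negamax(child) for child in node)


def alphabeta(node, alpha, beta, order_moves, counter):
    """Negamax alpha-beta; counter[0] counts evaluated leaf positions."""
    if isinstance(node, int):
        counter[0] += 1
        return node
    # Oracle ordering: the child with the lowest value for the opponent first.
    children = sorted(node, key=negamax) if order_moves else node
    best = -INF
    for child in children:
        score = -alphabeta(child, -beta, -alpha, order_moves, counter)
        best = max(best, score)
        alpha = max(alpha, score)
        if alpha >= beta:
            break  # cutoff: the opponent will avoid this line
    return best


tree = build_tree(depth=4, branching=4, rng=random.Random(1))
plain, ordered = [0], [0]
value_plain = alphabeta(tree, -INF, INF, False, plain)
value_ordered = alphabeta(tree, -INF, INF, True, ordered)

print(f"value: {value_plain} (unordered) vs {value_ordered} (ordered)")
print(f"leaves evaluated: {plain[0]} unordered, {ordered[0]} ordered, "
      f"{4 ** 4} without pruning")
```

Both searches return the same value, but the well-ordered one needs fewer evaluations. At a fixed budget such as 20,000 nodes, those savings let the search go deeper instead.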

To give an idea of the impressive progress: Deep Blue, the supercomputer that beat Kasparov in 1997, analysed an average of 200 million positions per second and took between 60 and 120 seconds for each move, thus exploring between 12 and 24 billion nodes per move, with an estimated Elo of 2800. Modern versions of Stockfish achieve better results by analysing just 20,000 nodes per move, roughly 600,000 to 1,200,000 times fewer (about six orders of magnitude), which highlights the technological leap achieved.
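The arithmetic behind this comparison is easy to check. The sketch below uses only the figures quoted above. The constant and function names are illustrative.

```python
import math

DEEP_BLUE_NODES_PER_SECOND = 200_000_000  # average figure quoted above
STOCKFISH_NODES_PER_MOVE = 20_000         # fixed-node limit used in the test


def node_ratio(seconds_per_move: int) -> int:
    """How many times more nodes Deep Blue searched per move."""
    deep_blue_nodes = DEEP_BLUE_NODES_PER_SECOND * seconds_per_move
    return deep_blue_nodes // STOCKFISH_NODES_PER_MOVE


for seconds in (60, 120):
    ratio = node_ratio(seconds)
    print(f"{seconds:>3} s per move: {ratio:,} times more nodes "
          f"(about 10^{math.log10(ratio):.1f})")
```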

Rating list: Stockfish 20knodes