With the new Stockfish version just released, the strongest engine on Earth has raised the bar a bit higher still. The new version is about 30 Elo stronger in long time-control matches, and again sits in first place on all the rating lists (CCRL, CEGT, FastGm; the SSDF list is still pending). 3500, 3550, 3600 Elo and more…

That made me wonder what the real strength of the engine is at different numbers of nodes per move, and below what limit a normal human starts to have some chance of winning again.

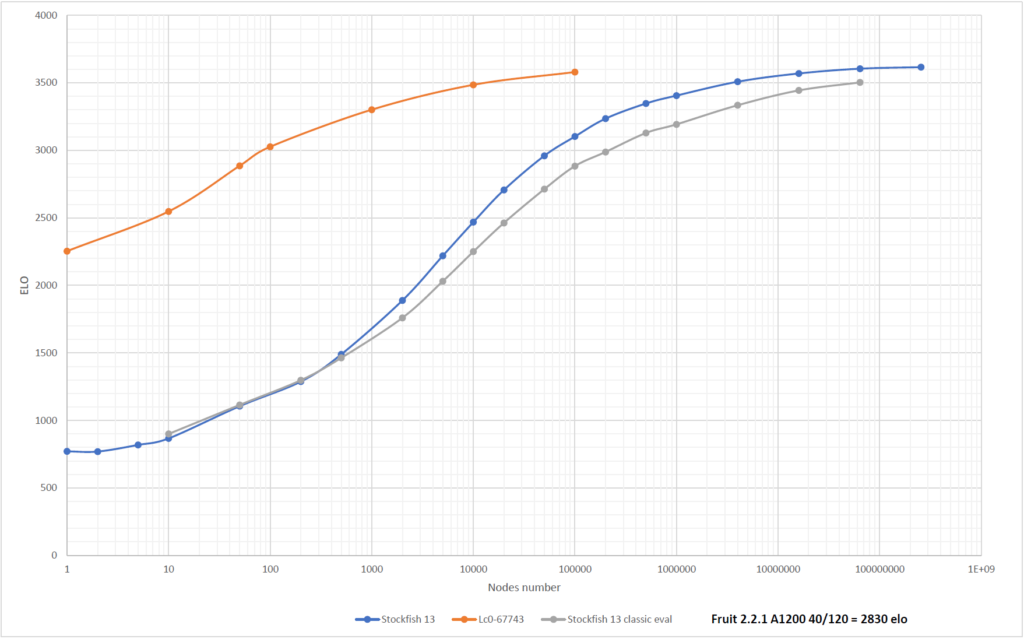

The graph above plots the Elo rating against the number of nodes per move, from a minimum of 1 up to 256,000,000 (on a single core).

In order to compare the Elo scores with a human scale (the FIDE scale, for example), or at least to try to, I used the SSDF rating list, which has been active for more than 20 years and is the only one I know of that runs its tests at tournament time controls (40 moves in 120 minutes), on different hardware, and with a scale calibrated on hundreds of games between computers and humans. I also looked for an engine that was not too strong, easy to download, and stable during matches. In the end I chose Fruit 2.2.1, which 20 years ago, on the old Athlon Thunderbird 1200, achieved the remarkable rating of 2830 Elo, a value similar to that of Deep Blue when it beat Kasparov.

Because my modern PC is roughly 6 times faster than an Athlon 1200 in a single thread (so the SSDF time control becomes the equivalent of 40 moves in 19 minutes), I ran a tournament between Fruit at this reduced time control and Stockfish 13, increasing Stockfish’s number of nodes/move step by step. Where Fruit proved too strong or too weak, I ran further matches pitting Stockfish against itself at different numbers of nodes/move (for example Sf limited to 5000 nodes/move against 10000, 20000, and 50000).

To calculate the Elo ratings of the engines I used Miguel Ballicora’s Ordo, and CuteChess to manage the matches. I also used the opening suite TopGM_6move.epd, found on the Web, which contains more than 6000 different openings played by grandmasters. In total, more than 110000 games were played, although most of them at a very low number of nodes/move (less than 500000).
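As a rough illustration of how a match result turns into a rating difference, here is a minimal Python sketch of the standard two-player logistic Elo formula. It is not Ordo’s method (Ordo fits the ratings of all engines together over the whole set of games); the function names are my own and are only meant to show the basic relationship between score and Elo.

```python
import math


def match_score(wins: int, draws: int, losses: int) -> float:
    """Average score per game, counting a draw as half a point."""
    games = wins + draws + losses
    if games == 0:
        raise ValueError("no games played")
    return (wins + 0.5 * draws) / games


def elo_diff_from_score(score: float) -> float:
    """Elo difference implied by an average score under the logistic Elo model."""
    if not 0.0 < score < 1.0:
        raise ValueError("score must be strictly between 0 and 1")
    return -400.0 * math.log10(1.0 / score - 1.0)


def expected_score(elo_diff: float) -> float:
    """Inverse of elo_diff_from_score: expected score for a given Elo advantage."""
    return 1.0 / (1.0 + 10.0 ** (-elo_diff / 400.0))
```

A 50% score maps to a difference of 0 Elo, and the two functions are inverses of each other, so `expected_score(elo_diff_from_score(s))` gives back `s`.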

From the graph, it can be seen that fewer than 100000 nodes/move (a fraction of a second on a modern PC) are enough for Stockfish to defeat Fruit or an equivalent top-rated human player, and fewer than 1000 (!!!) to defeat an average-to-good club player. As a comparison, Deep Blue defeated Kasparov in 1997 while analyzing about 200 million positions per second. Continuing the analysis, each doubling of the number of nodes brings a smaller and smaller Elo gain, until the curve almost flattens out (diminishing returns). It’s worth noting that only a few hundred games were played at 256M nodes, so the error margin there is larger. But the trend seems quite clear.
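The “diminishing returns” can be made concrete by computing the Elo gained per doubling of nodes between consecutive points of the curve. The sketch below is only an illustration: the function name is hypothetical and the sample values are placeholders chosen to make the arithmetic easy, not measurements taken from the graph.

```python
import math


def elo_per_doubling(points):
    """For consecutive (nodes, elo) points, return (nodes_lo, nodes_hi, gain),
    where gain is the Elo gained per doubling of nodes on that interval."""
    gains = []
    for (n0, e0), (n1, e1) in zip(points, points[1:]):
        doublings = math.log2(n1 / n0)  # how many times the node count doubled
        gains.append((n0, n1, (e1 - e0) / doublings))
    return gains


# Placeholder data, NOT values read from the graph in this post.
sample = [(1, 0.0), (2, 100.0), (8, 220.0)]

for lo, hi, gain in elo_per_doubling(sample):
    print(f"{lo} -> {hi} nodes: {gain:.1f} Elo per doubling")
```

With real data, a gain that keeps shrinking from one interval to the next is exactly the flattening visible in the curve.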

I also wondered how Stockfish 13 would perform with the NNUE neural network disabled (that is, evaluating positions with the classic algorithm used up to Stockfish 11), and how Lc0 scales. In the first case, at a very low number of nodes (fewer than 700-800) the “classic” Stockfish evaluation function is on par with the NNUE version and sometimes even better; however, this advantage quickly disappears as the number of nodes increases, and the classic eval is then outperformed, to the point that at very high node counts the NNUE version reaches the same Elo rating as the classic evaluation function with 16 times fewer nodes. This corresponds to a difference of about 100 Elo at 64M nodes/move.

It should be noted, however, that the classic evaluation function is about twice as fast as the NNUE version, so in real matches (same thinking time for both versions) the gap is smaller (50-60 Elo).

In the case of Lc0 the situation is even more surprising. For those who don’t know it, Lc0 is a chess engine that combines Monte Carlo tree search (MCTS) with a self-learning neural network; the project is inspired by the AlphaZero program made by Google/DeepMind. This is a different approach from Stockfish, which uses a classic minimax search with alpha-beta pruning and uses the NNUE neural network only for evaluation. Lc0 with only 1 node/move (that is, without any look-ahead at the opponent’s counterplay) is above 2200 Elo, more than enough to trouble even a human master. 100 nodes/move are enough to annihilate any human player on the planet (around 3000 Elo).
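For readers unfamiliar with the minimax/alpha-beta idea mentioned above, here is a toy Python sketch on a hand-made tree (nested lists, with numbers as leaf evaluations). It has nothing to do with Stockfish’s actual code, which is far more elaborate; it only shows how pruning lets the search skip positions that cannot change the result, which is why counting “nodes” is a natural measure of search effort.

```python
def alphabeta(node, alpha, beta, maximizing, visited):
    """Minimax with alpha-beta pruning on a toy tree.

    A node is either a number (leaf evaluation) or a list of child nodes.
    Every leaf that actually gets evaluated is appended to `visited`.
    """
    if isinstance(node, (int, float)):
        visited.append(node)
        return node
    if maximizing:
        best = float("-inf")
        for child in node:
            best = max(best, alphabeta(child, alpha, beta, False, visited))
            alpha = max(alpha, best)
            if alpha >= beta:  # the opponent will never allow this line
                break
        return best
    best = float("inf")
    for child in node:
        best = min(best, alphabeta(child, alpha, beta, True, visited))
        beta = min(beta, best)
        if alpha >= beta:  # we already have a better option elsewhere
            break
    return best


# Toy tree: three candidate moves, each with two replies.
tree = [[3, 5], [2, 9], [6, 1]]
visited = []
value = alphabeta(tree, float("-inf"), float("inf"), True, visited)
print(value, visited)
```

In this example the leaf 9 is never evaluated: once the reply worth 2 is found, the second move is already worse than the first (worth 3), so the rest of that branch is skipped.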

From the graph above it can be seen that Lc0 scales better than Stockfish 13 as the number of nodes increases, although once the number of nodes exceeds 10000/move the curve bends markedly until it nearly flattens out. Unfortunately, the hardware requirements to run Lc0 properly are huge, and it was impossible for me to test the program beyond 100000 nodes/move (the MCTS algorithm is a thousand times slower than minimax, and even with a good Nvidia GPU it is not possible to go much further). My first impression, supported by Stockfish’s recent victories over Lc0 in the TCEC and other tournaments, is that the flattening is real and that at long time controls Stockfish remains a notch above, even when Lc0 has a large hardware advantage. I used the net 67743, which at the time of the test was one of the latest.

A very broad and interesting subject, and you handled it well.

There are many ways to reduce the ELO of an engine:

– Blur/randomize the eval function: easy to do, but it gives a very bad playing feel, and I could explain why with many real examples that would take too long here. Moreover, it is not an ecological approach (it wastes CPU time for nothing).

– Lower the calculation speed (as the Texel chess engine does).

– A combination of these two approaches (as Texel also does).

– NNUE training like Maia (on Lichess), but again I can demonstrate that it is not human-like.

– Simplify the eval function (material only, plus a few small things).

– Node and/or depth limits: my favorite (but there is no linear relationship between the two), and I don’t know how to decide which is the right one.

What is interesting is that I get the same result: 1500 ELO = 1000 nodes (my personal ELO is around 1500).

I test many engines on Lichess and I’m ready to help with this.

I remember playing against dedicated computers during the ’80s, when commercial dedicated computers reached 1500-1900 ELO (my ELO was 1750), and I found a specific anti-computer trap to win. When the ’90s arrived, my trap no longer worked.

Limiting a computer’s ELO should not mean making it commit errors (by blurring the eval or using a bad NNUE), because of the bad playing feel.

I tested SF15 with NNUE eval activated at depth=4: I observed 1700-1900 ELO.

My feeling as a chess player watching it play: it looks like an experienced player who sometimes misses complex tactics.

My favorite engine is Ethereal: very few contributors and an ELO not far from SF’s (with 10000 contributors?). Andy has a very clean, economical C coding style that gives a small and fast result.

ELO_1500 is the nickname of my bot account on Lichess.

JeromeBGM is my own human account.

See my own winning games against Maia, and specifically the last 8 plies of those games.

example: https://lichess.org/W0dDYuAL1kl0

12. Qxg5 would never be played by a computer with a standard eval: it would try to escape the mate at any price.

Regards

feel free to mail me

Jérôme

Hi Jerome,

thanks for your comment.

I agree with you: there are different ways to lower the ELO of an engine. Personally, I’ve always found it difficult to imitate human play by weakening super-strong engines, and I prefer to play against older engines or engines that are very weak. I’m not a strong player myself, but I find this approach more enjoyable. When I was developing Naraku as a hobby many years ago, I tried to imitate human behavior by weakening the engine, essentially through a combination of eval simplification, speed reduction, and parameter optimization by means of a genetic algorithm whose objective function was targeted at reproducing selected human games, trying to bring the engine close to the Elo levels I had included in the program. The results were not very good, though: the program still played too artificially.

However, I’ve made an update to the SF vs Lc0 node test, testing SF 15 vs Lc0: https://www.melonimarco.it/2022/06/12/stockfish-15-e-lc0-test-al-variare-del-numero-dei-nodi

But the page at the moment is only in Italian.

Could you explain to me how you limited the nodes per move? I can’t find it on Fritz.

I used CuteChess (the command-line version), which has an option to limit the number of nodes per move for each engine. All the engines tested here (Stockfish, Lc0, and Fruit) also accept a command to move once a certain number of nodes has been searched (the value is passed to them by CuteChess).
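To give an idea of what such a setup can look like, here is a small Python sketch that only builds (and prints) a `cutechess-cli` command line with a per-engine `nodes=` limit. It is not the exact command used for these tests: the engine paths, names, file names, and number of rounds are hypothetical, and option names can differ between CuteChess versions, so check `cutechess-cli -help` before using it. At the protocol level, a UCI engine is told to stop after N nodes with `go nodes N`.

```python
def engine_args(cmd, name, nodes):
    """Arguments describing one engine with a fixed node budget per move."""
    return ["-engine", f"cmd={cmd}", f"name={name}", f"nodes={nodes}"]


def build_match_command(engine_a, engine_b, rounds, openings, pgn_out):
    """Build a cutechess-cli argument list for a node-limited match.

    engine_a / engine_b are (cmd, name, nodes) tuples. Nothing is executed here.
    """
    args = ["cutechess-cli"]
    args += engine_args(*engine_a)
    args += engine_args(*engine_b)
    # No clock: each move is bounded only by the node limit.
    args += ["-each", "proto=uci", "tc=inf"]
    args += ["-openings", f"file={openings}", "format=epd", "order=random"]
    # Two games per opening, with colors swapped.
    args += ["-rounds", str(rounds), "-games", "2", "-repeat"]
    args += ["-pgnout", pgn_out]
    return args


# Hypothetical paths and values, for illustration only.
command = build_match_command(
    ("./stockfish", "SF_5000", 5000),
    ("./stockfish", "SF_10000", 10000),
    rounds=500,
    openings="TopGM_6move.epd",
    pgn_out="sf_nodes.pgn",
)
print(" ".join(command))
```

The resulting PGN file can then be fed to a rating tool such as Ordo to obtain the Elo estimates.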